Our first annual user conference, Effortless '23, gave us insights into how work is changing and the new chances this brings for the future. We delved into topics like AI & automation and the tools shaping the modern workplace.

A group of expert speakers, including startup creators, investors, product leaders, and support staff, gathered to explore innovative uses of AI and the latest tech advances.

At Effortless, we embraced the notion that tech enterprises, with their particular set of challenges, merited custom-made solutions. The event created a shared environment for discovering the ins and outs of AI and design principles aimed at simplifying numerous technological tasks.

During the conference, a highlight was the insightful keynote discussion featuring Vinod Khosla, an initial investor in OpenAI, alongside Ely Greenfield, Adobe’s Chief Technology Officer of Digital Media. They engaged in a thought-provoking dialogue about the broad trends shaping the future of artificial intelligence.

They delved into how these trends are influencing business strategies, impacting consumer experiences, and driving innovation across various industries. Their conversation provided a deep dive into the expansive role of AI in digital transformation and its potential for creating new paradigms in technology and beyond.

Vinod and Ely kickstarted their discussion with Multimodal GenAI, a concept quickly gaining importance in the future of artificial intelligence conversations.

What is Multimodal GenAI?

Multimodal GenAI refers to Generative Artificial Intelligence systems that can understand and generate outputs in multiple modes or forms, such as text, images, audio, and sometimes even video. These AI models can process and produce content that blends different data types, allowing them to perform more complex tasks than single-mode AI systems.

For instance, a multimodal GenAI might be able to take a written description of a scene and generate a corresponding image, or it could analyze an image and generate descriptive text about it. This versatility enables such AI systems to be used in various applications, from creating art to driving autonomous vehicles, where the integration of visual, auditory, and textual understanding is crucial.

Multimodal Generative AI systems are built on three components: an input module, a fusion module, and an output module.

Input Module

The input module is where different forms of data (text, image, video, audio) are processed and fed into the fusion module. Unimodal AI systems exist as well; in those, the input and the output take the same form. At the input stage, the module extracts the features that are relevant for generating an output. When there is a feedback loop, as in an ongoing conversation, the input module can also derive context from previous inputs and outputs.

Fusion Module

In the intermediate stage, the extracted features are run through the models, which perform various mathematical computations. Each modality is broken down into information packets that pass through a fusion mechanism, and specialized sub-models are designed to process the different modalities. At this stage, the model can understand the relationships between modalities. In some cases, additional information is extracted to refine the integration. The fused result is then fed into the multimodal model.

Output Module

The output module presents you with actionable information in the desired form. Modality-specific processing is carried out at this stage. The modality of the output can be a combination of text, video, audio, or an image depending on the context. This module is also capable of error handling in case a certain input cannot be processed or if a certain failure occurs.

That is the basic cycle of the process. The generated output can then be refined based on user feedback or recommendations until it matches what is needed. One model's purpose might be to convert text-based input into a consumable video, while another might be built to summarize a conversation from a transcript or an audio file.
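The input, fusion, and output stages described above can be sketched as a toy pipeline. This is only an illustration under simplifying assumptions: the names `ModalityInput`, `input_module`, `fusion_module`, `output_module`, and `run_pipeline` are hypothetical, the "features" are just a mean and a maximum, and fusion is plain concatenation. A real multimodal model would use learned encoders and a learned fusion mechanism.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class ModalityInput:
    """Hypothetical container: numbers stand in for real text, image, or audio data."""
    modality: str
    data: List[float]


def input_module(inputs: List[ModalityInput]) -> Dict[str, List[float]]:
    """Extract a tiny feature vector (mean, max) for each modality."""
    features: Dict[str, List[float]] = {}
    for item in inputs:
        if not item.data:
            raise ValueError(f"empty input for modality {item.modality!r}")
        features[item.modality] = [sum(item.data) / len(item.data), max(item.data)]
    return features


def fusion_module(features: Dict[str, List[float]]) -> List[float]:
    """Fuse per-modality features by concatenating them in sorted modality order."""
    fused: List[float] = []
    for modality in sorted(features):
        fused.extend(features[modality])
    return fused


def output_module(fused: List[float], target: str) -> str:
    """Describe the output in the requested modality, rejecting unknown ones."""
    supported = {"text", "image", "audio", "video"}
    if target not in supported:
        return f"error: unsupported output modality {target!r}"
    return f"{target} output from {len(fused)} fused features"


def run_pipeline(inputs: List[ModalityInput], target: str) -> str:
    """Run input -> fusion -> output, turning input failures into error messages."""
    try:
        return output_module(fusion_module(input_module(inputs)), target)
    except ValueError as exc:
        return f"error: {exc}"


if __name__ == "__main__":
    sample = [
        ModalityInput("text", [1.0, 3.0]),
        ModalityInput("image", [2.0, 4.0, 6.0]),
    ]
    print(run_pipeline(sample, "text"))
```

Keeping the three stages as separate functions mirrors the description: each modality is handled on its own at the input stage, combined in one place, and only the output stage needs to know the requested modality or report a failure.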

The overall takeaway here is that the models can process information much as humans do, only faster. The models undergo continuous iterations to provide the desired output. Feedback plays an important role in fine-tuning a model so that it becomes more accurate.

Use cases for multimodal generative AI can be found across businesses, which raises the question of whether the right data is available. When people trust the output blindly, things can take a wrong turn. The conversation between Vinod and Ely established that content authenticity needs to be achieved in the short term and that copyright laws need to evolve. In a business context, having data that is not generic is more critical than ever.

A few applications of Multimodal Generative AI

Multimodal Generative AI is transforming work in numerous ways. Here’s how it can boost productivity and creativity in your business:

1️⃣ Enhanced communication:

From polishing your writing to creating videos in no time, the way you communicate is changing drastically. If you used to read through long pieces of text only to find yourself lost, why not summarize them first to see whether they contain anything relevant? The use cases are practically unlimited, from creating content to consuming more of it in less time.

2️⃣ Personalized user experience:

Providing context to the models makes it possible to understand personas and design experiences for them across platforms. You can create personalized experiences with virtual assistants and adapt interfaces to specific requirements or personas. You may have been thrilled and shocked at the same time by some of the ad recommendations on the social channels you use. Imagine bringing the same level of personalization to business applications.

3️⃣ Human-like customer support:

LLMs trained on domain-specific knowledge can act as the first layer of customer support. This reduces the constant need for human intervention in solving common queries, and it also cuts waiting time. It is a cost-effective way to provide a world-class customer experience. Couple this with the power of user data to make every action personalized. Machines are now capable of generating information in natural language, which makes the interaction more human-like.

4️⃣ Generation of reports:

The mundane task of reporting can be automated by defining workflows specific to your requirements. You can create a dashboard that serves your business requirements or even ask the AI model to suggest a better view. Analyzing data and presenting it in an understandable format has long been the most time-consuming step before you can start noticing the patterns that matter to the business. Many of these manual tasks can be automated, making room to work more efficiently.

5️⃣ Prioritization:

AI helps provide context on intent and common trends so that decisions are backed by logic. Teams can predefine the criteria, the level of effort required, the resources to allocate, and the workflows to follow for the problem being solved. Machines can then help define priorities and allocate work based on the available time. A major factor here is how productivity levels change with the advent of new technologies.

6️⃣ Designing and prototyping:

Text-to-visual design conversions help you get started on complex designs and prototypes. Remember racking your brain to figure out the best design for a logo? Many people still do, but you now have the opportunity to use multimodal GenAI to create visual designs with little to no effort. Creating A/B testing campaigns, increasing collaboration between designers, and learning more about design on the go are now possible.

Revolution of AI, creativity, and humanity

“There are going to be a billion bipedal robots over the next decade.” Having invested in deep learning startups for over a decade and in OpenAI in 2019, Vinod talked about what the future holds. The trifecta of AI, creativity, and humanity brings together new opportunities and challenges.

The previous waves of the internet democratized access to information and then to publishing. Now, AI is democratizing access to reasoning, analysis, and creativity. The opportunities are vast, and making the most of them comes down to being curious. Used correctly, the recent advancements expand our abilities and help us cut through the noise.

Vinod also shared a fun story about using AI to create a rap for his daughter’s wedding. He thinks that our abilities will grow in new and unexpected ways. This shows that using technology creatively can lead to fresh solutions to problems.

We are held back by the past: we tend to extrapolate from it to predict the future rather than invent the future. This hampers creativity and restricts our ideas to what our previous experiences bias us toward. Being able to think outside the box is going to be a skill in itself.

The bigger challenges, and where future investments will be made

Vinod expressed a strong opinion that copyright law for AI should be treated like any other copyright law. Infringement is not judged by what went in as input but by how similar the output is to the original work. Laws are made to benefit society as a whole, and the same principle should guide the laws put in place for AI.

On the other hand, the competition with China was described as a techno-economic war. The culture around open sourcing and transparency might have to change, since it gives competitors an opportunity to steal, which is just a bad social idea.

Investing a lot more in safety research and in the detection of AI would be the next big thing to look at. International treaties like those covering nuclear or biological warfare were seen as unworkable for AI.

Ely also stressed content authenticity as another key point of the conversation, and how it could be achieved in the short term by creating a verifiable chain of evidence that AI was not used. The two-pronged approach is to make that evidence cryptographically verifiable and to make it consumable. Society operates on trust, and enabling that trust will become more important.
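To make the idea of a verifiable chain of evidence concrete, here is a minimal sketch of a signed provenance chain. It is not a method described in the keynote: the function names and record fields are hypothetical, and it uses a shared-secret HMAC from Python's standard library for brevity, where a production system would more likely rely on public-key signatures.

```python
import hashlib
import hmac
import json
from typing import Dict, List


def sign_record(key: bytes, record: Dict[str, str], prev_signature: str) -> str:
    """Sign a record together with the previous signature, linking the chain."""
    payload = json.dumps(record, sort_keys=True).encode() + prev_signature.encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def build_chain(key: bytes, records: List[Dict[str, str]]) -> List[dict]:
    """Produce a list of entries, each carrying its record and chained signature."""
    chain = []
    prev_signature = ""
    for record in records:
        signature = sign_record(key, record, prev_signature)
        chain.append({"record": record, "signature": signature})
        prev_signature = signature
    return chain


def verify_chain(key: bytes, chain: List[dict]) -> bool:
    """Recompute every signature in order; any edited record breaks verification."""
    prev_signature = ""
    for entry in chain:
        expected = sign_record(key, entry["record"], prev_signature)
        if not hmac.compare_digest(expected, entry["signature"]):
            return False
        prev_signature = entry["signature"]
    return True


if __name__ == "__main__":
    secret = b"demo-key"  # hypothetical key, for illustration only
    history = [
        {"step": "captured", "tool": "camera"},
        {"step": "cropped", "tool": "editor"},
    ]
    chain = build_chain(secret, history)
    print(verify_chain(secret, chain))
```

Because each signature covers the previous one, changing any earlier step invalidates every later entry. That covers the "verifiable" prong; making the result consumable, for example as a simple badge a reader can inspect, is a separate design problem.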

In the concluding part of their keynote, Ely and Vinod wrapped up with a debate on the potential impact of AI on human drive. They touched upon the delicate balance between concerns that AI could restrict human creativity and the belief in its capacity to enhance it. Ultimately, they underscored the increasing importance of our humanity in the age of AI.

Changing the landscape of work with DevRev

The crux of this is that customer-centric companies become more relevant than ever. Vinod also predicted that there are going to be a billion programmers in the next decade. As programming will be done in natural language, DevRev presents the opportunity to make it effortless.

The way we work is going to evolve by having machines do all of the manual tasks that we have been doing for a while now. The use of computer applications is going to be more like a conversation. The experience is going to be simpler every step of the way.

DevRev is a Copilot for your support teams, product teams, and growth teams. All of this comes together to help you make business decisions faster while working closely with your customers.

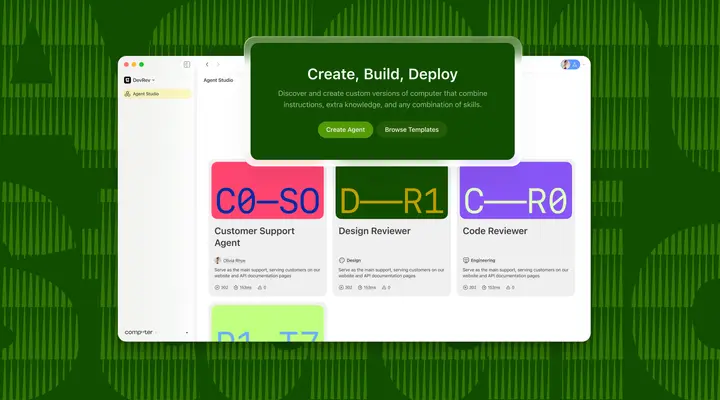

How is DevRev building the future of work?

Tech companies deserve to use AI tools that are tailored to their needs. Everyone claims to be building with AI, yet most are using generic datasets that do not benefit your business specifically.

DevRev lets you build your own LLM on your data so that the machines communicate the way you do. Your AI workflows can now be more human, with domain-specific knowledge, and can interact intelligently in natural language.

Our OneCRM acts as a source of truth for all teams in an organization, helping you achieve a large business impact and grow faster. The platform enables you to be customer-centric by streamlining and prioritizing activities, bringing information symmetry, and helping teams converge.

Experience the power of unifying teams on a single platform to gain more visibility into the work being done, change focus based on priorities, and bring the customer and your team together every step of the way.

We believe the trifecta of data, design, and AI comes together in our core products to build intelligence, detect intent, and facilitate business functions. Capturing data effectively is important for any organization that wants to analyze it and then strategize its next move.

The experience of working on a platform that hosts multiple teams has to be seamless and its workflows well defined, and this is where design plays an effective role. Combining this with the power of AI can be a game-changer for the way you work.

The ability to gain enough context from your current view and to summarize it is what makes DevRev your copilot. You can learn more about our core competencies here.